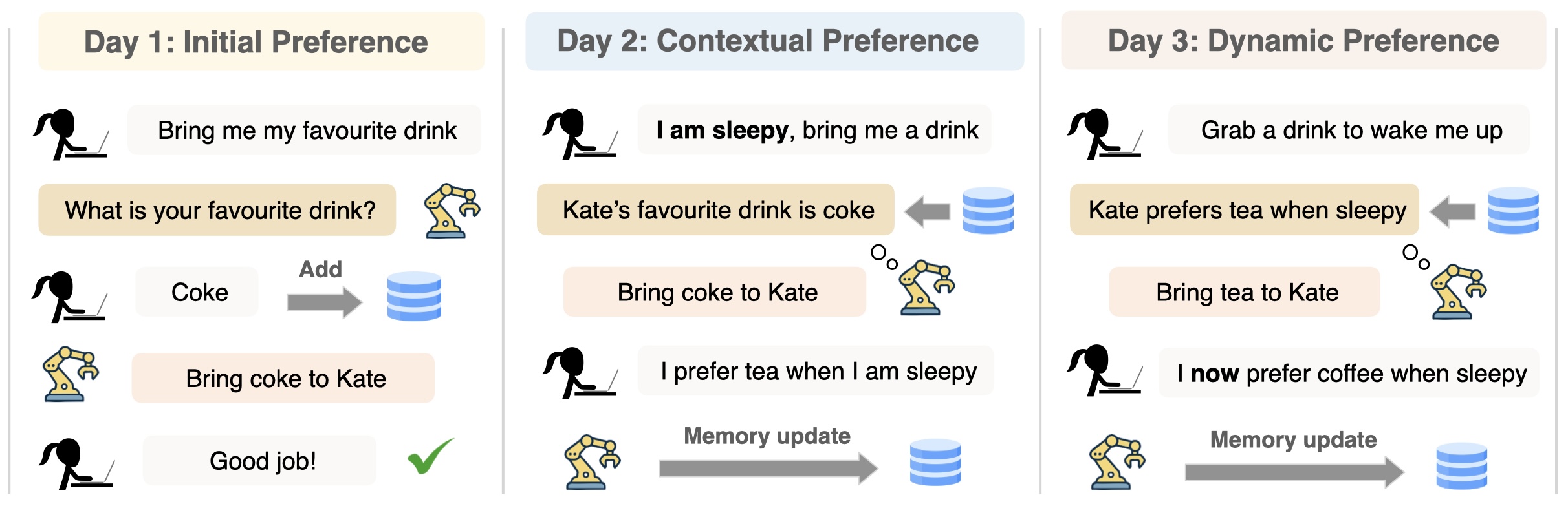

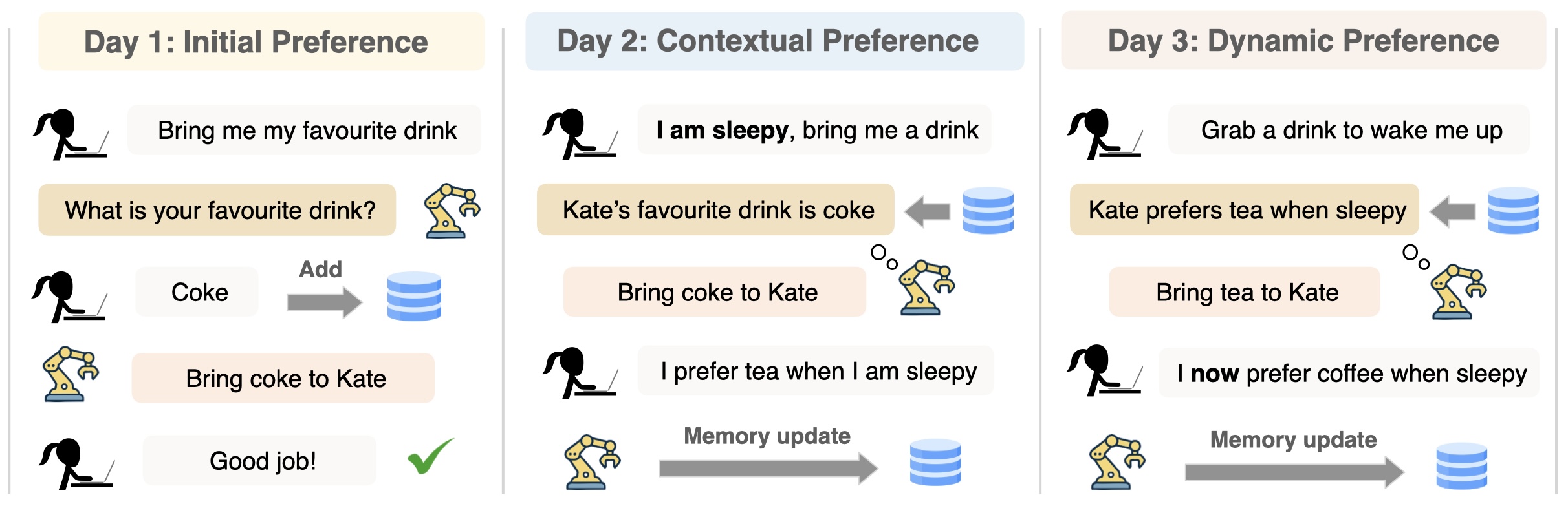

Learn initial preference → Learn context-dependent preferences → Adapt to preference shifts

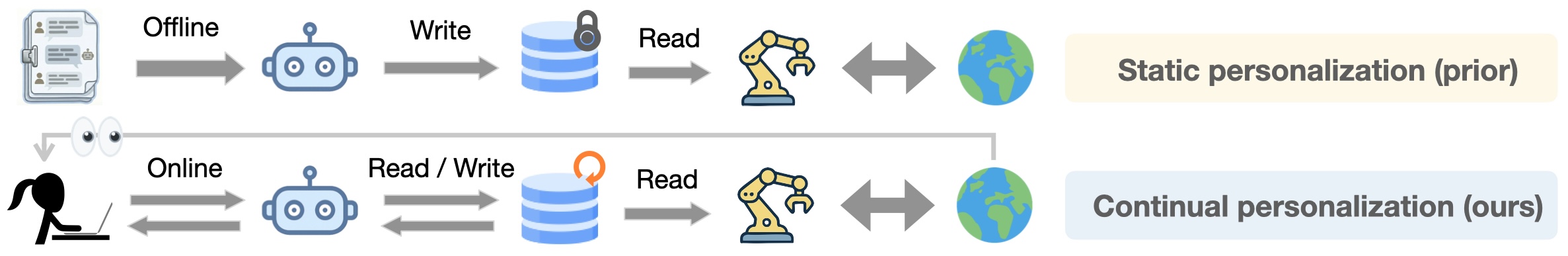

Modern AI agents are powerful but often fail to align with the idiosyncratic, evolving preferences of individual users. Prior approaches typically rely on static datasets, either training implicit preference models on interaction history or encoding explicit user profiles in external memory. However, these approaches struggle with new users and with preferences that change over time.

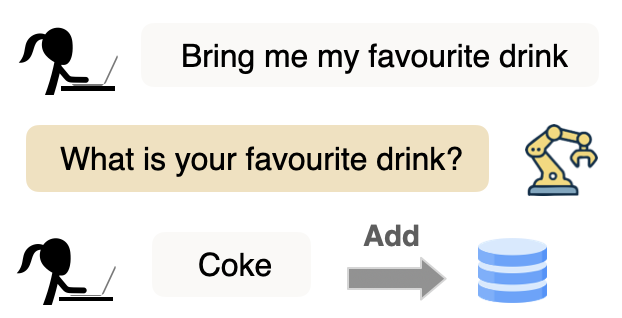

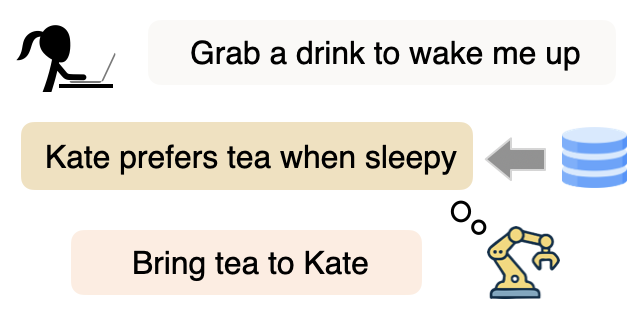

We introduce Personalized Agents from Human Feedback (PAHF), a framework for continual personalization in which agents learn online from live interaction using explicit per-user memory. PAHF operationalizes a three-step loop: (1) pre-action questions to resolve ambiguity under partial observability, (2) action execution guided by stored preferences, and (3) post-action feedback to update its memory when user preferences shift.

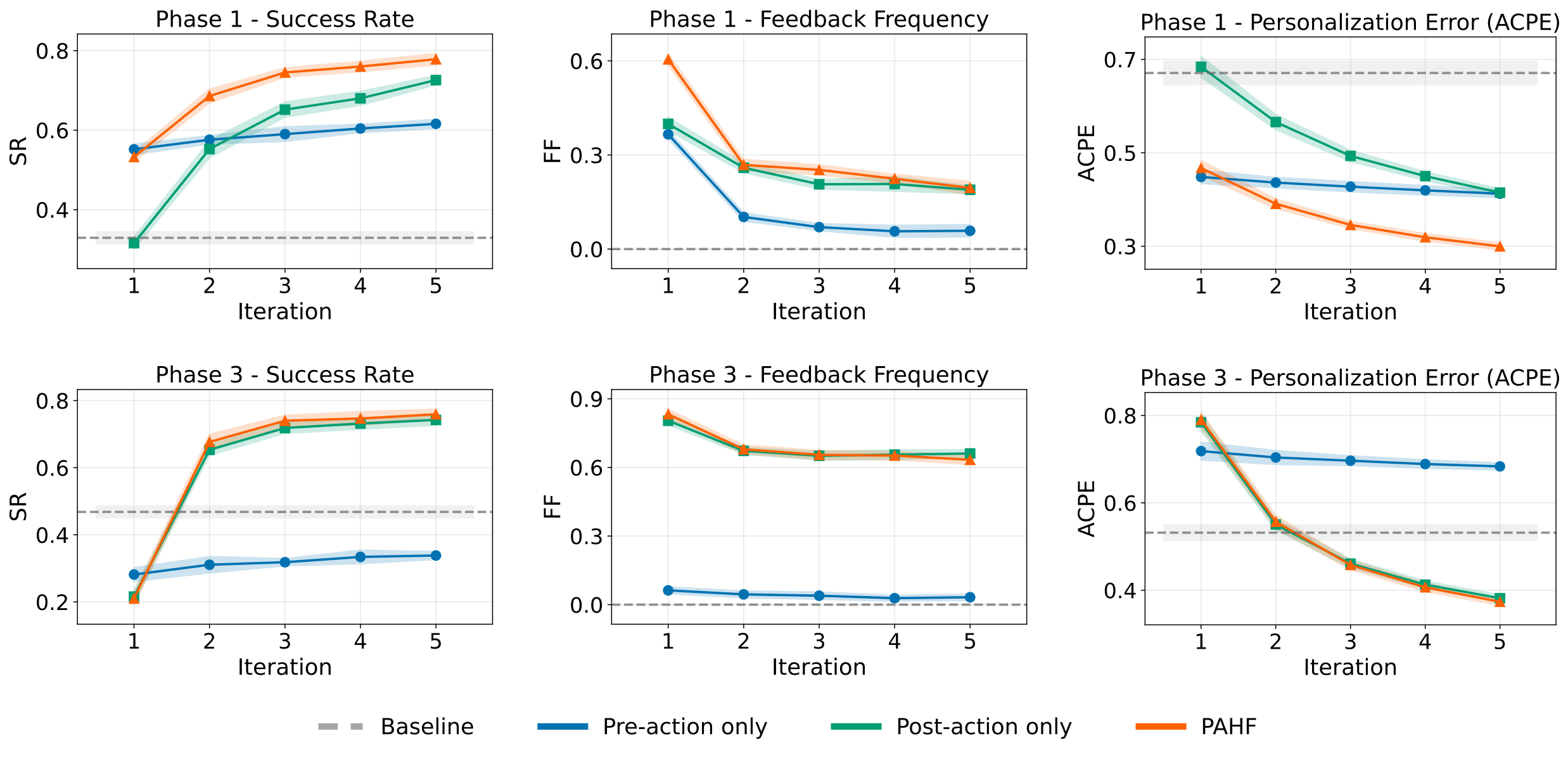

We evaluate PAHF with a four-phase protocol on two benchmarks, embodied manipulation and online shopping, that measures both initial preference learning and adaptation to preference drift. Theoretical and empirical results confirm that combining explicit memory with dual feedback is critical: PAHF consistently outperforms baselines, minimizing initial errors and recovering rapidly after preferences shift.

Explicit per-user memory with two feedback channels: pre-action clarification and post-action correction.

- Pre-action Interaction: Ask if the preference is ambiguous.
- Action Execution: Act using retrieved memory.
- Post-action Correction: Use feedback to correct and refresh stale memory.

Our framework enables continual personalization by leveraging online user feedback to dynamically read and write to memory, ensuring the agent adapts to evolving preferences.
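The read/write loop described above can be illustrated with a minimal sketch. This is not the authors' implementation: `UserMemory`, `SimulatedUser`, and `pahf_step` are hypothetical names, memory is reduced to a plain dictionary keyed by context, and "ambiguity" is approximated as "nothing stored for this context".

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class UserMemory:
    """Explicit per-user memory (assumption: a dict from context to preference)."""
    prefs: dict[str, str] = field(default_factory=dict)

    def read(self, context: str) -> str | None:
        return self.prefs.get(context)

    def write(self, context: str, preference: str) -> None:
        self.prefs[context] = preference


class SimulatedUser:
    """Hypothetical stand-in for a human whose preferences can drift."""

    def __init__(self, prefs: dict[str, str]) -> None:
        self.prefs = dict(prefs)

    def answer(self, context: str) -> str:
        # Pre-action channel: the user states the preference when asked.
        return self.prefs[context]

    def feedback(self, context: str, action: str) -> str | None:
        # Post-action channel: return a correction only if the action was wrong.
        wanted = self.prefs[context]
        return None if action == wanted else wanted


def pahf_step(memory: UserMemory, user: SimulatedUser, context: str) -> tuple[str, bool]:
    """Run one interaction; return the action taken and whether it succeeded."""
    preference = memory.read(context)
    if preference is None:
        # (1) Pre-action: ask, because memory holds nothing for this context.
        preference = user.answer(context)
        memory.write(context, preference)
    # (2) Act using the retrieved (or freshly learned) preference.
    action = preference
    # (3) Post-action: overwrite stale memory if the user corrects the action.
    correction = user.feedback(context, action)
    if correction is not None:
        memory.write(context, correction)
    return action, correction is None


memory = UserMemory()
user = SimulatedUser({"morning drink": "coffee"})
first = pahf_step(memory, user, "morning drink")   # asks first, then succeeds
user.prefs["morning drink"] = "tea"                # the preference shifts
second = pahf_step(memory, user, "morning drink")  # acts on stale memory, gets corrected
third = pahf_step(memory, user, "morning drink")   # memory is now refreshed
```

In this toy run the pre-action question prevents an error on first contact, while the post-action correction is what repairs memory after the shift, since the agent has no reason to ask again about a preference it believes it already knows.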

- Embodied manipulation: Home/office tasks with contextual preferences.
- Online shopping: Multi-constraint product selection.

| Phase | Mode | Purpose |
|---|---|---|
| Phase 1 | Training | Initial Learning |
| Phase 2 | Testing | Initial Personalization |
| Phase 3 | Training | Adaptation to Drift |
| Phase 4 | Testing | Adapted Personalization |
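The four-phase protocol can be sketched as a small evaluation harness. The names `run_protocol`, `run_episode`, and `shift_preferences` are hypothetical, and two details are assumptions rather than facts from the source: that memory is frozen during the testing phases, and that the preference shift is applied between Phase 2 and Phase 3.

```python
from typing import Callable, Dict, List


def run_protocol(
    run_episode: Callable[[str, bool], bool],
    shift_preferences: Callable[[], None],
    train_tasks: List[str],
    test_tasks: List[str],
) -> Dict[str, float]:
    """Return the success rate of each phase.

    run_episode(task, learn) returns True on success; learn=False means
    the agent may not update its memory (assumed for testing phases).
    """

    def rate(tasks: List[str], learn: bool) -> float:
        results = [run_episode(task, learn) for task in tasks]
        return sum(results) / len(results)

    rates = {}
    rates["phase1_train"] = rate(train_tasks, True)   # initial learning
    rates["phase2_test"] = rate(test_tasks, False)    # initial personalization
    shift_preferences()                               # assumed point of drift
    rates["phase3_train"] = rate(train_tasks, True)   # adaptation to drift
    rates["phase4_test"] = rate(test_tasks, False)    # adapted personalization
    return rates


# Toy agent: a dict memory that is corrected after each failed training episode.
truth = {"mug": "shelf", "book": "desk"}
memory: Dict[str, str] = {}


def run_episode(task: str, learn: bool) -> bool:
    success = memory.get(task) == truth[task]
    if learn and not success:
        memory[task] = truth[task]  # post-action correction
    return success


def shift_preferences() -> None:
    truth["mug"] = "drawer"  # one preference changes


rates = run_protocol(run_episode, shift_preferences, ["mug", "book"], ["mug", "book"])
```

With this toy agent, Phase 1 fails on every task while memory is filled, Phase 3 fails only on the shifted preference, and both testing phases succeed once memory has been corrected.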

Learning curves for initial preference learning (Phase 1) and adaptation to preference shifts (Phase 3).

Test phase success rates (%)

| Method | Embodied, Phase 2 | Embodied, Phase 4 | Shopping, Phase 2 | Shopping, Phase 4 |
|---|---|---|---|---|
| No memory | 32.3±0.4 | 44.8±0.5 | 27.8±0.2 | 27.0±0.4 |
| Pre-action only | 54.1±1.1 | 35.7±1.0 | 34.4±0.5 | 56.0±0.7 |
| Post-action only | 67.9±1.5 | 68.3±1.2 | 38.9±0.5 | 66.9±0.8 |
| PAHF (pre+post) | 70.5±1.7 | 68.8±1.3 | 41.3±0.8 | 70.3±1.1 |
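As a quick reading aid, the snippet below computes each method's gain over the no-memory baseline from the mean success rates reported in the table. It uses only the table's means; the ± terms are ignored, so it says nothing about statistical significance.

```python
# Mean success rates from the table, in column order:
# (Embodied Phase 2, Embodied Phase 4, Shopping Phase 2, Shopping Phase 4)
results = {
    "No memory": (32.3, 44.8, 27.8, 27.0),
    "Pre-action only": (54.1, 35.7, 34.4, 56.0),
    "Post-action only": (67.9, 68.3, 38.9, 66.9),
    "PAHF (pre+post)": (70.5, 68.8, 41.3, 70.3),
}

baseline = results["No memory"]

# Percentage-point gain of each method over the no-memory baseline.
gains = {
    method: tuple(round(value - base, 1) for value, base in zip(values, baseline))
    for method, values in results.items()
    if method != "No memory"
}

# Method with the highest mean in each column.
best_per_column = [
    max(results, key=lambda method: results[method][col])
    for col in range(len(baseline))
]

for method, gain in gains.items():
    print(f"{method}: {gain}")
```

On these means, PAHF has the highest value in all four columns, and the pre-action-only variant falls below the no-memory baseline in Embodied Phase 4.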

@misc{liang2026learningpersonalizedagentshuman,
  title={Learning Personalized Agents from Human Feedback},
  author={Kaiqu Liang and Julia Kruk and Shengyi Qian and Xianjun Yang and Shengjie Bi and Yuanshun Yao and Shaoliang Nie and Mingyang Zhang and Lijuan Liu and Jaime Fernández Fisac and Shuyan Zhou and Saghar Hosseini},
  year={2026},
  eprint={2602.16173},
  archivePrefix={arXiv},
  primaryClass={cs.AI},
  url={https://arxiv.org/abs/2602.16173},
}